A deep dive into building a white-label SaaS health platform with AI-powered lab analysis, tiered model routing, and per-clinic customization — from architecture decisions to production deployment.

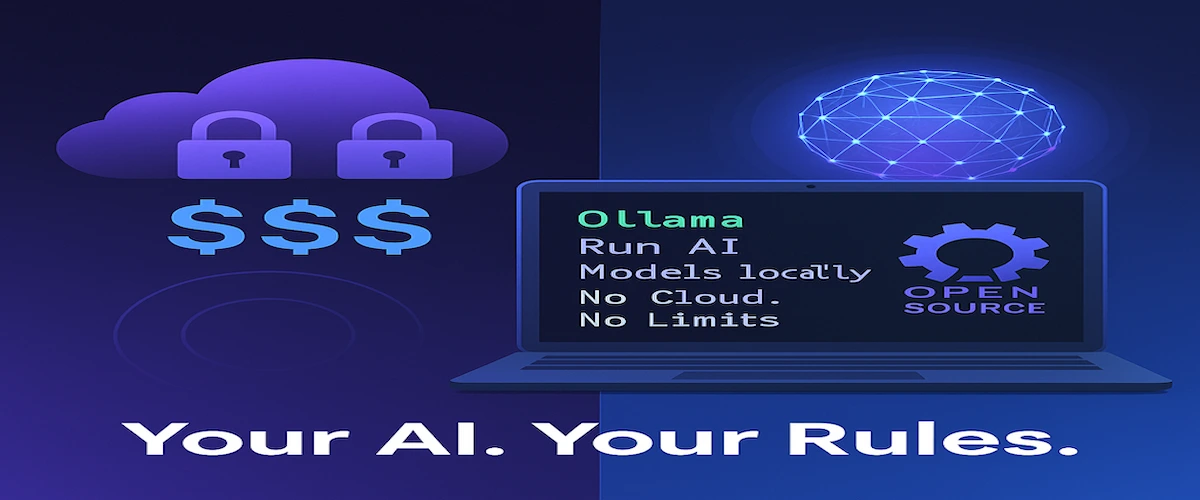

Artificial Intelligence has rapidly become essential across various industries, transforming how we interact with technology and process vast amounts of data. Yet, as we embrace these powerful AI solutions, new concerns about data security, latency, and costs associated with cloud-based platforms are emerging. While cloud AI services like OpenAI or Anthropic offer immense capability, they also come with inherent risks—such as increased vulnerability to data breaches and substantial operational expenses.

In response to these challenges, Ollama steps into the spotlight as a compelling solution. This innovative, open-source platform allows you to run large language models (LLMs) directly on your own hardware, keeping your data secure, reducing latency, and dramatically cutting costs. Particularly beneficial for sensitive sectors like healthcare and finance, Ollama grants organizations and individual developers unprecedented control and customization.

Throughout this article, we’ll dive deeper into Ollama—exploring its core technology, standout features, practical examples, and industry-specific applications. We’ll also reflect on why this shift toward local AI execution matters, and how Ollama might influence the trajectory of artificial intelligence going forward.

This innovative platform enables users to run open-source models such as LLaMA 3, DeepSeek R1, Mistral, and Qwen on your local machine using simple commands.With a focus on local execution, Ollama offers a secure alternative to cloud-based services, ensuring that data processing and model deployment occur directly on the user's machine. This strategy not only enhances data privacy but also optimizes performance by reducing reliance on third-party servers. Visit ollama.com for more information.

At its core, Ollama capitalizes on a robust architecture that seamlessly integrates with multiple operating systems, facilitating efficient deployment and management of LLMs. By allowing direct execution of models on user devices, Ollama minimizes latency—a common issue associated with cloud computing. The platform's infrastructure is designed for optimal resource utilization, which effectively balances computational loads and enhances both responsiveness and overall performance.

To achieve this, Ollama employs an innovative approach to memory management and parallel processing. The technology leverages local GPUs where available, allowing efficient handling of complex language models without compromising on speed or accuracy. Additionally, Ollama's modular design supports easy updates and integration with emerging AI technologies, ensuring its relevance in a rapidly changing landscape.

Ollama supports a wide range of open-source models. This flexibility allows you to choose the right model for your specific use case, balancing performance, size, and capabilities. Popular choices include:

Choosing the right model often involves considering the task at hand, available hardware resources, and desired output quality. Ollama makes it easy to experiment with different models.

A distinct feature of Ollama is local deployment, allowing users to download and execute advanced models on their devices, maintaining data privacy and user control. This is particularly advantageous in sectors such as finance and healthcare, where data confidentiality is critical. Find out more at ollama.com.

Example Applications

Take, for example, a healthcare organization needing rapid, secure processing of patient data. Implementing Ollama enables all data to remain in-house, complying with legal and regulatory frameworks such as HIPAA.

Ollama supports various operating systems, including macOS, Linux, and Windows, ensuring smooth integration across different development environments. This compatibility makes it a versatile tool for individual developers and large enterprises alike.

This flexibility is particularly beneficial for organizations with diverse technological ecosystems, allowing seamless transitions between different computational environments without significant restructuring.

Ollama features an intuitive user interface complemented by powerful command-line tools, catering to a diverse range of users. This dual approach allows technical users to engage with command-line interactions while providing non-technical users with a graphical interface for ease of use. Visit aiplesk.com for more details.

Practical Implementation

In academia, researchers benefit from command-line tools for research, while businesses can rely on the graphical UI for customer-facing applications, eliminating the need for complex programming tasks. For example, in a university setting, researchers can deploy and test new language models with minimal setup time, focusing more on innovation and less on technical hurdles. Meanwhile, in a corporate environment, employees can leverage the user-friendly interface for routine tasks, enhancing productivity without needing deep technical expertise.

One of Ollama's standout features is its ability to operate offline, which is vital for environments with limited connectivity or critical data privacy needs. Visit ollama.org for further information.

Offline functionality provides unmatched advantages, particularly in remote or underserved areas where internet access is unreliable. It also ensures that sensitive data does not leave the premises, aligning with best practices in data security and compliance.

Ollama includes a local API that runs by default on localhost:11434. This lets developers interact with language models from any programming environment via HTTP requests, offering a straightforward way to integrate LLM capabilities into applications.

Here's an example using curl to interact with the API:

For Python developers, Ollama offers official client libraries, simplifying the process of sending requests to the Ollama API and handling responses within Python scripts. This is ideal for building AI-powered applications, automation scripts, or data analysis pipelines.

Here's a basic Python example:

Excited to get Ollama running on your own machine? It's surprisingly quick and straightforward, whether you're on Windows, macOS, or Linux. This guide will walk you through installing the platform, grabbing a cool model like DeepSeek-R1, and running it all locally—no cloud needed!

Before we dive in, let's quickly check if your system is ready for the kinds of models you're looking to play with. Think of it like making sure you have enough fuel for a road trip! Here’s a general idea:

⚠️ Just a friendly reminder: hitting those minimum RAM and GPU requirements is pretty important to keep things running smoothly and avoid any frustrating slowdowns.

First things first, head over to ollama.com and pick the installer for your operating system:

.exe file. Easy peasy..dmg file and follow the familiar setup wizard.✅ After it's installed, you can quickly check if everything's good to go (we'll open a terminal in the next step, but if you're eager):

This should show you the version you just installed.

This is where you'll type in the commands. Here’s how to find it, depending on your system:

Win + R, type cmd, and hit Enter. (PowerShell works great too!)With your terminal open and ready, it's time to download a model. We'll use the 8B (8 billion parameter) version of DeepSeek-R1 for this example, as it's a solid, balanced choice. Type this into your terminal:

📦 Ollama will start fetching the model. It might take a few minutes depending on your internet speed, as these files can be a few gigabytes. While that's downloading, here's a quick look at what those "B" numbers mean and other available versions for DeepSeek-R1:

Once the download finishes, you can fire up an interactive session with the model. In your terminal, type:

Now that you're up and running, here are a couple of pointers for chatting with DeepSeek-R1 (and other models):

With this setup, you’re all set to start exploring the amazing power of local AI, without needing to rely on the cloud. Have fun experimenting!

By processing data locally, Ollama seriously cuts down the risk of sending sensitive info to cloud servers. This is a huge win for staying compliant with tough data protection rules in industries like healthcare and finance. You can get more insights on this at okoone.com.

This "local-first" way of doing things helps organizations meet evolving privacy demands and builds trust by showing a commitment to managing data with integrity.

Running things locally means faster response times compared to cloud solutions, which is vital for any real-time applications. By keeping data processing on your device, Ollama minimizes those frustrating delays that can happen when data has to travel to and from the cloud. More details can be found at okoone.com.

Executing AI models on your own hardware reduces the need for pricey cloud services. Over time, this can lead to big savings on data transfer, storage, and computation costs. For more on this, visit okoone.com.

For organizations watching their budgets, cutting back on subscription-based cloud services can free up significant funds, allowing more investment in innovation and growth.

Ollama gives you complete control over which models you choose, how you customize them, and how you deploy them. This means you can create AI solutions perfectly tailored to your specific needs, adaptable for all sorts of applications. Find out more at okoone.com.

This kind of flexibility is especially great for startups and small businesses that need to adapt quickly and explore new opportunities without being bogged down by high costs or technical roadblocks.

This comparison really shows where Ollama shines. Unlike big cloud platforms like Amazon AWS or Google Cloud, Ollama's local approach means you're less dependent on network infrastructure. This can lead to cost savings and fewer headaches with connectivity, giving you a real competitive edge in fast-paced markets by streamlining access to AI without sacrificing speed or efficiency.

A major reason for the shift towards local AI is security and compliance, especially with regulations like GDPR in Europe and HIPAA in the U.S. These laws demand strict data protection, which local platforms like Ollama naturally support by keeping data exposure to a minimum. For more info, check out okoone.com.

Case Study in Compliance

Think about an EU-based financial institution that needs to follow GDPR. By using Ollama, they can ensure sensitive customer data never leaves their internal systems for external cloud servers. This simplifies compliance and reduces the risks tied to data breaches, meeting legal duties and fostering customer trust.

Edge computing is where local execution really thrives. Ollama's setup can power edge devices to process huge amounts of data with minimal delay. For example, in autonomous vehicles, local AI processing is key for making real-time decisions, allowing vehicles to react instantly to their surroundings without needing a cloud connection.

Case Study: Automotive Sector

In the car industry, manufacturers can use Ollama to develop in-car assistance systems that need immediate data processing. This bypasses cloud delays, boosting safety and user experience. By embedding AI models directly into vehicles, they can also offer personalized features and upgrades, opening new revenue streams.

Different industries are finding unique ways to use Ollama's local execution. In healthcare, it enables secure, on-site analysis of medical images and patient records. The finance sector, on the other hand, uses local AI for fraud detection and customer analytics without exposing sensitive data to cyber threats.

Financial Industry Use Case

A financial firm using Ollama might use LLMs to analyze transaction data, spot anomalies, and improve fraud detection – all within their own secure networks, keeping critical financial data safe.

Beyond industry, Ollama opens up exciting opportunities in education and research. By making advanced AI models accessible locally, schools and universities can bring cutting-edge tech into their classrooms, nurturing a new generation of AI experts. For researchers, Ollama is a fantastic platform for experimenting with new algorithms and conducting real-time analysis, driving innovation.

Ollama is truly a game-changing platform, putting the power of large language models right into users' hands through local execution. By focusing on privacy, performance, and your control, Ollama tackles the big worries that come with cloud-based AI, offering a really strong alternative. Whether you're a developer or looking for industry-specific solutions, Ollama’s rich set of features makes it an incredibly valuable tool in today's AI world. As AI continues to race forward, Ollama is well-positioned to lead the way in how we deploy and manage AI models.

By embracing Ollama, you get to tap into advanced AI capabilities while keeping your data firmly under your control, signaling a new era of secure and efficient AI. This local-first approach doesn't just challenge our reliance on cloud computing; it sets a new standard for future AI innovations.

For any organization or individual wanting to harness AI's power without compromising on security or breaking the bank, Ollama represents a forward-thinking and truly transformative solution.

Ollama's pioneering approach to local AI model deployment is a big step forward in our quest for secure, efficient, and user-controlled AI solutions. Its potential is huge, offering exciting opportunities for innovation and better user experiences across all sorts of fields.

A deep dive into building a white-label SaaS health platform with AI-powered lab analysis, tiered model routing, and per-clinic customization — from architecture decisions to production deployment.

Learn how Agentic AI in healthcare transforms care delivery with AI agents, automation, decision support, patient engagement, risks, compliance, and adoption strategy.